Dense Relation Distillation with Context-aware Aggregation for Few-Shot Object Detection

Paper: https://arxiv.org/pdf/2103.17115.pdf

Code: GitHub - hzhupku/DCNet (https://github.com/hzhupku/DCNet): Dense Relation Distillation with Context-aware Aggregation for Few-Shot Object Detection, CVPR 2021

Abstract

Conventional deep-learning-based object detection methods require a large number of bounding-box annotations for training, and such high-quality annotated data is expensive to obtain. Few-shot object detection learns to adapt to novel classes from only a few annotated examples. It is very challenging because the fine-grained features of novel objects are easily overlooked when so little data is available. In this work, we aim to fully exploit the features of annotated novel objects and to capture fine-grained features of query objects. To this end, we propose Dense Relation Distillation with Context-aware Aggregation (DCNet) to tackle the few-shot detection problem. Built on a meta-learning-based framework, the Dense Relation Distillation module aims to fully exploit support features. Support features and the query feature are densely matched, covering all spatial locations in a feed-forward fashion. This abundant use of guidance information gives the model the ability to handle common challenges such as appearance changes and occlusions. Moreover, to better capture scale-aware features, the Context-aware Aggregation module adaptively harnesses features from different scales for a more comprehensive feature representation. Extensive experiments show that our approach achieves state-of-the-art results on the PASCAL VOC and MS COCO datasets. Code will be made available at https://github.com/hzhupku/DCNet.

1 Introduction

With the success of deep convolutional neural networks, object detection has made great progress in recent years [20, 23, 8]. The success of deep CNNs, however, relies heavily on large-scale datasets such as ImageNet [2] that enable the training of deep models. When labeled data becomes scarce, CNNs can severely overfit and fail to generalize. In contrast, human beings can learn a new concept well from only a few examples. Moreover, some object categories naturally have few examples, and for some data, such as medical images, bounding-box annotations are laborious to obtain. These problems have drawn increasing attention to learning models from limited examples. Few-shot learning aims to train models that generalize well when only a few examples are provided. However, most existing few-shot learning works focus on image classification [29, 26, 27], and only a few address few-shot object detection. Object detection requires not only class prediction but also localization of the object, which makes it much more difficult than few-shot classification.

Prior studies in few-shot object detection mainly fall into two groups. Most of them [13, 35, 34] adopt a meta-learning-based framework [5] that performs feature reweighting for class-specific prediction. In contrast, Wang et al. [31] adopt a two-stage fine-tuning approach that fine-tunes only the last layer of the detector and achieves state-of-the-art performance. Wu et al. [33] use a similar strategy and focus on the scale variation problem in few-shot detection.

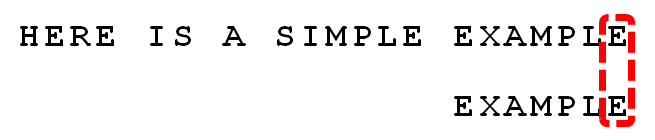

However, the aforementioned methods often suffer from several drawbacks due to the challenging nature of few-shot object detection. First, the relations between support features and the query feature are hardly fully explored in previous few-shot detection works. These works mostly apply global pooling to the support features to modulate the query branch, which tends to lose detailed local context. Specifically, appearance changes and occlusions are common for objects, as shown in Fig. 1. Without enough discriminative information, the model is obstructed from learning the critical features for class and bounding-box prediction. Second, although the scale variation problem has been widely studied in prior works [17, 15, 33], it remains a serious obstacle in few-shot detection. Under few-shot settings, a feature extractor with scale-aware modifications is prone to overfitting, leading to deteriorated performance on both base and novel classes.

Figure 1. Two challenges for few-shot object detection. a) Appearance changes between support and query images are common and can mislead the model. b) Occlusion leads to incomplete feature representations, causing misclassification and missed detections.

In order to alleviate the above issues, we first propose the dense relation distillation module to fully exploit the support set. Given a query image and a few support images from novel classes, the shared feature learner extracts the query feature and support features for the subsequent matching procedure. Intuitively, the criterion that determines whether a query object and a support object belong to the same category mainly measures how much feature similarity they share. When appearance changes or occlusions occur, local detailed features dominate the matching between candidate objects and template objects. Hence, instead of obtaining global representations of the support set, we propose a dense relation distillation mechanism in which query and support features are matched at the pixel level. Specifically, key and value maps are produced from the features. Keys encode visual semantics for matching, and values contain detailed appearance information for decoding. With the local information of the support set effectively retrieved for guidance, performance can be significantly boosted, especially in extremely low-shot scenarios.

Furthermore, to mitigate the scale variation problem, we design the context-aware feature aggregation module to capture essential cues for different scales during RoI pooling. Since directly modifying the feature extractor could result in overfitting, we choose to make the adjustment from a more flexible perspective. Recognizing objects at different scales requires different levels of contextual information, while a fixed pooling resolution may cause a substantial loss of context. An adaptive aggregation mechanism that attends to local and global features simultaneously can therefore help preserve contextual information for objects of different scales. Instead of performing RoI pooling at one fixed resolution, we use three different pooling resolutions to capture richer context features. We then introduce an attention mechanism that adaptively aggregates the output features into a more comprehensive representation.

The contributions of this paper can be summarized as follows:

1. We propose a dense relation distillation module for the few-shot detection problem, which aims to fully exploit support information to assist the detection of objects from novel classes.

2. We propose an adaptive context-aware feature aggregation module that better captures global and local features to alleviate the scale variation problem, boosting few-shot detection performance.

3. Extensive experiments show that our approach achieves consistent improvements on the PASCAL VOC and MS COCO datasets. In particular, it outperforms the state-of-the-art methods on both datasets.

2 Related Work

2.1. General Object Detection

Deep-learning-based object detectors can be mainly divided into two categories: one-stage and two-stage detectors. The one-stage YOLO series [20, 21, 22] provides a proposal-free framework that uses a single convolutional network to directly predict classes and bounding boxes. SSD [18] uses default boxes to adjust to various object shapes. On the other hand, R-CNN and its variants [7, 9, 6, 23, 8] fall into the second category. These methods first extract class-agnostic region proposals for potential objects from a given image. The generated boxes are then refined and classified into categories by subsequent modules. Moreover, many works have been proposed to handle scale variation [17, 15, 24, 25]. Compared to one-stage methods, two-stage methods are slower but perform better. In our work, we adopt Faster R-CNN as the base detector.

2.2. Few-Shot Learning

Few-shot learning aims to learn transferable knowledge that generalizes to new classes with scarce examples. Bayesian inference is used in [4] to generalize knowledge from a pretrained model for one-shot learning. Meta-learning-based methods have recently become prevalent in few-shot learning. Metric-learning-based methods [16, 29, 26, 27] have achieved state-of-the-art performance on few-shot classification. Matching Network [29] encodes inputs into deep neural features and performs weighted nearest-neighbor matching to classify query images. Our proposed method is also based on a matching mechanism. Prototypical Network [26] represents each class with a single prototype, which is a feature vector. Relation Network [27] learns a distance metric to compare the target image with a few labeled images. Optimization-based methods [19, 5], in turn, are designed for fast adaptation to new few-shot tasks. [11] proposes a cross-attention mechanism to learn correlations between support and query images. The above methods focus on few-shot classification, while few-shot object detection remains relatively under-explored.

2.3. Few-Shot Object Detection

Few-shot object detection aims to detect objects from novel classes with only a few annotated training examples. LSTD [1] and RepMet [14] adopt a general transfer learning framework that reduces overfitting by adapting pre-trained detectors to few-shot scenarios. Recently, Meta YOLO [13] designed a few-shot detection model based on YOLO v2 [21]. It learns generalizable meta features and automatically reweights the features for novel classes using class-specific activation coefficients produced from support examples. Meta R-CNN [35] and FsDetView [34] follow a similar procedure with Faster R-CNN as the base detector. TFA [31] simply performs two-stage fine-tuning, fine-tuning only the classifier in the second stage, and achieves better performance. MPSR [33] proposes multi-scale positive sample refinement to handle the scale variation problem. CoAE [12] proposes a non-local RPN and approaches one-shot detection from a tracking perspective, comparing itself with tracking methods. In contrast, our method performs cross-attention directly on the features extracted by the backbone and targets the few-shot detection task. FSOD [3] proposes an attention-RPN, a multi-relation detector, and a contrastive training strategy to detect novel objects. In our work, we adopt a meta-learning-based framework similar to Meta R-CNN and further improve its performance. Moreover, with our proposed method, the class-specific prediction procedure can be removed, simplifying the overall process.

3 Method

3.1. Preliminaries

Problem Definition. Following the setting in [13, 35], object classes are divided into base classes $C_{base}$ with abundant annotated data and novel classes $C_{novel}$ with only a few annotated samples, where $C_{base} \cap C_{novel} = \emptyset$. We aim to obtain a few-shot detection model that can detect objects from both base and novel classes at test time by leveraging generalizable knowledge from the base classes. The number of annotated instances per novel class is set to k (i.e., k-shot).

We align the training scheme with the episodic paradigm [29] for the few-shot scenario. Given a k-shot learning task, each episode is constructed by sampling two things. The first is a support set containing image-mask pairs for different classes, $S = \{(x_i, y_i)\}_{i=1}^{N}$. Here $x_i \in \mathbb{R}^{h \times w \times 3}$ is an RGB image, $y_i \in \mathbb{R}^{h \times w}$ is a binary mask for objects of class $i$ in the support image, generated from bounding-box annotations, and $N$ is the number of classes in the training set. The second is a query image $q$ together with annotations $m$ for the training classes in the query image. The input to the model is the support pairs and the query image, and the output is the detection prediction for the query image.
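A minimal sketch of how one training episode could be assembled, assuming boxes are given as `(x1, y1, x2, y2)` pixel coordinates and the data sits in simple in-memory pools. The helper names (`boxes_to_mask`, `sample_episode`) and the pool formats are hypothetical illustrations, not DCNet's code. The sketch only shows the structure: one image-mask pair per class, with the mask filled inside that class's boxes, plus one query image with its annotations.

```python
import random

import numpy as np


def boxes_to_mask(height, width, boxes):
    """Binary support mask: 1 inside any box of the target class, 0 elsewhere.

    Boxes are (x1, y1, x2, y2) pixel coordinates with x2/y2 exclusive (assumption).
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    for x1, y1, x2, y2 in boxes:
        # Clip to the image so boxes touching the border stay in range.
        x1, x2 = max(0, int(x1)), min(width, int(x2))
        y1, y2 = max(0, int(y1)), min(height, int(y2))
        mask[y1:y2, x1:x2] = 1
    return mask


def sample_episode(support_pool, query_pool, classes, rng=random):
    """Sample one episode: an (image, mask) support pair per class plus one query.

    support_pool: dict mapping class name -> list of (image, boxes); image is (h, w, 3).
    query_pool:   list of (image, annotations).
    Both formats are hypothetical, chosen only for illustration.
    """
    support_set = []
    for cls in classes:
        image, boxes = rng.choice(support_pool[cls])
        h, w = image.shape[:2]
        support_set.append((image, boxes_to_mask(h, w, boxes)))
    query_image, query_annotations = rng.choice(query_pool)
    return support_set, query_image, query_annotations
```

For example, `boxes_to_mask(4, 5, [(1, 1, 3, 3)])` marks a 2 × 2 region, so the mask sums to 4.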

Basic Object Detection. The choice of base detector varies. [13] utilizes YOLO v2 [21], a one-stage detector, while [35] adopts Faster R-CNN [23], a two-stage detector that provides consistently better results. Therefore, we also adopt Faster R-CNN as our base detector. It consists of a feature extractor, a region proposal network (RPN), and a detection head (RoI head).

Feature Reweighting for Detection. We choose Meta R-CNN [35] as our baseline method. Formally, let $I$ denote an input query image and $\{(x_i, y_i)\}_{i=1}^{N}$ denote the support images and the masks converted from bounding-box annotations, where $N$ is the number of training classes. RoI features $\hat{z} = \{\hat{z}_j\}_{j=1}^{n}$ are generated by the RoI pooling layer ($n$ is the number of RoIs). Class-specific vectors $w_i \in \mathbb{R}^{C}$, $i = 1, 2, \dots, N$, are produced by a reweighting module that shares its backbone parameters with the feature extractor, where $C$ is the feature dimension. The class-specific feature $z_i$ is then obtained as:

$$ z_i = \hat{z} \otimes w_i, \quad i = 1, 2, \dots, N $$

where ⊗ denotes channel-wise multiplication. Class-specific prediction is then performed to output the detection results. Building on this methodology, we make a significant further improvement and simplify the prediction procedure by removing the class-specific prediction.
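The reweighting step amounts to broadcasting: every RoI feature is multiplied channel-wise by every class-specific vector. The sketch below assumes the RoI features have already been flattened to C-dimensional vectors (shape `(n, C)`) and that the N class-specific vectors are stacked as `(N, C)`. `reweight_rois` is a hypothetical name, and the code illustrates the operation described for Meta R-CNN [35]; it is not that method's actual code.

```python
import numpy as np


def reweight_rois(roi_features, class_vectors):
    """Channel-wise reweighting of RoI features by class-specific vectors.

    roi_features:  (n, C) pooled RoI features, one row per RoI.
    class_vectors: (N, C) one vector per support class.
    Returns an (N, n, C) array whose [i, j] entry is roi_features[j] * class_vectors[i].
    """
    roi_features = np.asarray(roi_features, dtype=np.float64)
    class_vectors = np.asarray(class_vectors, dtype=np.float64)
    # (1, n, C) * (N, 1, C) broadcasts to (N, n, C): every RoI times every class vector.
    return roi_features[None, :, :] * class_vectors[:, None, :]


z = reweight_rois([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [[1.0, 0.0], [0.0, 2.0]])
print(z.shape)  # (2, 3, 2)
```

Here the third RoI `[5, 6]` reweighted by the second class vector `[0, 2]` becomes `[0, 12]`. A class vector therefore acts as a per-channel gate on every RoI.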

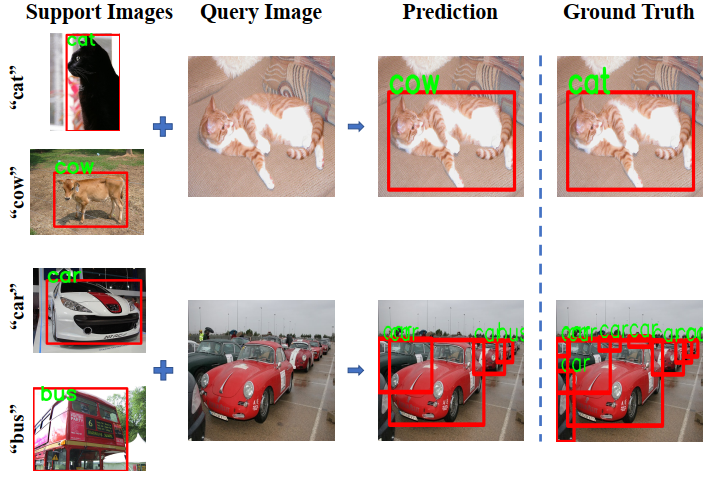

3.2. DCNet

As illustrated in Fig. 2, we present the Dense Relation Distillation (DRD) module together with the Context-aware Feature Aggregation (CFA) module to fully exploit support features and capture essential context information. These two components form the final model, DCNet. We first describe the architecture of the DRD module and then present the details of the CFA module.

Figure 2. The overall framework of our proposed DCNet. For training, the input of each episode consists of a query image and N support image-mask pairs from N classes. The shared feature extractor first produces the query feature and support features. Then, the dense relation distillation (DRD) module performs dense feature matching to activate the features of the input query that co-exist with the support classes. With the proposals produced by the RPN, the context-aware feature aggregation (CFA) module adaptively harnesses features generated by pooling operations at different scales. It captures different levels of features for a more comprehensive representation.

3.2.1 Dense Relation Distillation Module

Key and Value Embedding. Given a query image and a support set, the query and support features are produced by feeding the images into the shared feature extractor. The inputs of the dense relation distillation (DRD) module are the query feature and the support features. Both are first encoded into pairs of key and value maps by dedicated deep encoders. The query encoder and the support encoder adopt the same structure but do not share parameters.

The encoder takes one or more features as input and outputs two feature maps for each input feature, a key and a value. It does so with two parallel 3 × 3 convolution layers, which also reduce the dimension of the input feature to save computation. Key maps are used to measure the similarity between the query feature and the support features, which helps determine where to retrieve relevant support values. Key maps are therefore learned to encode visual semantics for matching, while value maps store detailed information for recognition. For the query feature, the output is a pair of key and value maps, $k^q \in \mathbb{R}^{C/8 \times H \times W}$ and $v^q \in \mathbb{R}^{C/2 \times H \times W}$. Here $C$ is the feature dimension, and $H$ and $W$ are the height and width of the input feature map. For the support features, each feature is independently encoded into key and value maps, giving $k^s \in \mathbb{R}^{N \times C/8 \times H \times W}$ and $v^s \in \mathbb{R}^{N \times C/2 \times H \times W}$. Here $N$ is the number of target classes (also the number of support samples). The generated key and value maps are then fed into the relation distillation part, where the key maps of the query and the support features are densely matched to address target objects.
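As a rough illustration, the encoder can be sketched in NumPy with two parallel 3 × 3 convolutions. They use stride 1 and zero padding 1, so the spatial size is unchanged. Several details here are assumptions for illustration: the channel reductions (C/8 for keys, C/2 for values), the random initialization, and all names. A real implementation would use a deep-learning framework's convolution layers. The two encoder instances mirror the point that the query and support encoders share a structure but not parameters.

```python
import numpy as np


def conv3x3(x, weight, bias):
    """Naive 3x3 convolution (cross-correlation), stride 1, zero padding 1.

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3); bias: (C_out,).
    Returns (C_out, H, W): the spatial size is preserved.
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            window = padded[:, dy:dy + h, dx:dx + w]  # input shifted by (dy - 1, dx - 1)
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx], window)
    return out + bias[:, None, None]


class KeyValueEncoder:
    """Two parallel 3x3 convs: a key map with C/8 channels, a value map with C/2 (assumed ratios)."""

    def __init__(self, in_channels, rng):
        key_dim, value_dim = in_channels // 8, in_channels // 2
        self.wk = rng.standard_normal((key_dim, in_channels, 3, 3)) * 0.01
        self.bk = np.zeros(key_dim)
        self.wv = rng.standard_normal((value_dim, in_channels, 3, 3)) * 0.01
        self.bv = np.zeros(value_dim)

    def __call__(self, feature):
        return conv3x3(feature, self.wk, self.bk), conv3x3(feature, self.wv, self.bv)


rng = np.random.default_rng(0)
# Same structure, separate parameters: two independent encoder instances.
query_encoder = KeyValueEncoder(64, rng)
support_encoder = KeyValueEncoder(64, rng)

kq, vq = query_encoder(rng.standard_normal((64, 6, 8)))
support_feats = rng.standard_normal((3, 64, 5, 5))    # N = 3 supports
pairs = [support_encoder(f) for f in support_feats]   # each support encoded independently
ks = np.stack([k for k, _ in pairs])
vs = np.stack([v for _, v in pairs])
print(kq.shape, vq.shape, ks.shape, vs.shape)
```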

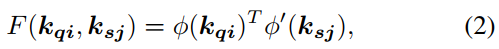

Relation Distillation. After the key/value maps of the query and support features are acquired, relation distillation is performed. As illustrated in Fig. 2, soft weights for the value maps of the support features are computed by measuring the similarity between the key maps of the query feature and those of the support features. The pixel-wise similarity is computed in a non-local manner, formulated as:

$$ f(k^q_i, k^s_j) = \phi(k^q_i)^{\top}\, \phi'(k^s_j) $$

where $i$ and $j$ index the query and support locations. $\phi$ and $\phi'$ denote two different linear transformations whose parameters are learned by back-propagation during training, forming a dynamically learned similarity function. After computing the similarity of pixel features, we apply softmax normalization to obtain the final weight $W$:

$$ W_{ij} = \frac{\exp\big(f(k^q_i, k^s_j)\big)}{\sum_{j'} \exp\big(f(k^q_i, k^s_{j'})\big)} $$

The values of the support features are then retrieved by a weighted summation with these soft weights and concatenated with the value map of the query feature. The final output is thus formulated as:

$$ y = \big[\, v^q,\ W * v^s \,\big] $$

where $[\cdot, \cdot]$ denotes concatenation along the channel dimension and $*$ denotes the matrix inner product. Note that there are $N$ support features, which yield $N$ key-value pairs. We sum the $N$ output results to obtain the final result. This is a refined query feature that is activated by the support features wherever the query and support images contain objects of co-existing classes.

Previous works [13, 35, 34] use class-wise vectors generated by global pooling of the support features to modulate the query feature, guiding feature learning from a holistic view. However, appearance changes and occlusions are common in natural images. The holistic feature may therefore be misleading when objects of the same class vary greatly between query and support samples. Moreover, when most parts of an object are hidden by occlusion, retrieving local detailed features becomes essential, which the former methods completely neglect. Equipped with the dense relation distillation module, our model can instead distill pixel-level relevant information from the support features. As long as some common characteristics exist, the query-feature pixels belonging to objects that co-exist in the query and support samples are further activated. This provides a robust modulated feature that facilitates class and bounding-box prediction.

Our distillation method can be seen as an extension of the non-local self-attention mechanism [28, 30]. However, instead of performing self-attention, we specifically design the relation distillation module to retrieve information from the support features to modulate the query feature. This can be treated as cross-attention.
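The following NumPy sketch walks through the relation distillation steps described above for one query and N supports:

1. Apply linear maps to the keys.
2. Compute pixel-wise dot-product similarities.
3. Apply a softmax over support locations.
4. Retrieve support values by a weighted sum.
5. Concatenate the retrieved values with the query values.
6. Sum over the N supports.

The matrices `phi` and `phi_prime` stand in for the two learned linear transformations. Applying them as plain per-pixel matrices is an assumption, and the function names are hypothetical. Following the prose literally, the query-value half is added once per support, so it ends up scaled by N. The official code may organize this step differently.

```python
import numpy as np


def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def relation_distillation(kq, vq, ks, vs, phi, phi_prime):
    """Dense cross-attention from query keys to support keys (sketch).

    kq: (Ck, H, W) query keys           vq: (Cv, H, W) query values
    ks: (N, Ck, Hs, Ws) support keys    vs: (N, Cv, Hs, Ws) support values
    phi, phi_prime: (Ck, Ck) matrices standing in for the two learned linear maps.
    Returns the refined query feature, shape (2 * Cv, H, W).
    """
    ck, h, w = kq.shape
    cv = vq.shape[0]
    q = phi @ kq.reshape(ck, h * w)                     # phi(k^q_i) for every query pixel i
    vq_flat = vq.reshape(cv, h * w)
    out = np.zeros((2 * cv, h * w))
    for n in range(ks.shape[0]):
        s = phi_prime @ ks[n].reshape(ck, -1)           # phi'(k^s_j) for every support pixel j
        sim = q.T @ s                                   # (HW, HsWs) pixel-wise similarities
        weights = softmax(sim, axis=1)                  # each query pixel's weights sum to 1
        retrieved = vs[n].reshape(cv, -1) @ weights.T   # weighted sum of support values: (Cv, HW)
        # Concatenate [query values, retrieved values] along channels; sum over supports.
        out += np.concatenate([vq_flat, retrieved], axis=0)
    return out.reshape(2 * cv, h, w)
```

With identity matrices for `phi` and `phi_prime`, the similarity reduces to a plain dot product between key vectors. Because each query pixel's weights sum to 1, a support whose values are constant contributes exactly that constant.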

3.2.2 Context-aware Feature Aggregation

After dense relation distillation, the DRD module has fulfilled its role. The refined query feature is then fed into the RPN, which outputs region proposals. Taking the proposals and the feature as input, the RoI Align module extracts features for the final class prediction and bounding-box regression. In our original implementation, the pooling operation uses a fixed output resolution of 8, which is likely to cause information loss during training. In general object detection, such information loss can be compensated for by large-scale training data. In few-shot detection, however, only a few training samples are available, so the problem becomes severe and tends to produce misleading detection results. Moreover, the few-shot setting amplifies scale variation. Without adequate adaptation to different scales, the model tends to lose its ability to generalize to novel classes. To this end, we propose the Context-aware Feature Aggregation (CFA) module. Instead of a fixed resolution of 8, we empirically choose three resolutions, 4, 8, and 12, and perform parallel pooling operations to obtain a more comprehensive feature representation. The larger resolution tends to focus on local detailed context, which especially benefits smaller objects. The smaller resolution captures holistic information that benefits the recognition of larger objects. Together, they provide a simple and flexible way to alleviate the scale variation problem.
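To make the multi-resolution idea concrete, the sketch below pools one RoI into 4 × 4, 8 × 8, and 12 × 12 grids. For brevity it uses plain average pooling over integer-aligned bins rather than RoI Align's bilinear sampling. It therefore approximates, rather than reproduces, the pooling DCNet uses. The function names are hypothetical.

```python
import numpy as np


def roi_avg_pool(feature, box, resolution):
    """Average-pool one RoI into a resolution x resolution grid.

    Simplified stand-in for RoI Align: bins are snapped to integer cells and
    averaged, with no bilinear sampling. feature: (C, H, W); box: (x1, y1, x2, y2)
    in feature-map coordinates, assumed to lie inside the map.
    """
    c, h, w = feature.shape
    x1, y1, x2, y2 = box
    ys = np.linspace(y1, y2, resolution + 1)
    xs = np.linspace(x1, x2, resolution + 1)
    out = np.zeros((c, resolution, resolution))
    for i in range(resolution):
        ya = min(int(np.floor(ys[i])), h - 1)
        yb = min(max(ya + 1, int(np.ceil(ys[i + 1]))), h)  # every bin covers at least 1 row
        for j in range(resolution):
            xa = min(int(np.floor(xs[j])), w - 1)
            xb = min(max(xa + 1, int(np.ceil(xs[j + 1]))), w)
            out[:, i, j] = feature[:, ya:yb, xa:xb].mean(axis=(1, 2))
    return out


def multi_resolution_pool(feature, box, resolutions=(4, 8, 12)):
    """Pool the same RoI at several output resolutions in parallel (CFA-style input)."""
    return [roi_avg_pool(feature, box, r) for r in resolutions]
```

The coarse 4 × 4 grid summarizes the whole RoI, while the 12 × 12 grid keeps finer spatial detail. That trade-off is what the aggregation step later weighs.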

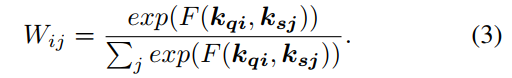

Each generated feature contains a different level of semantic information. To efficiently aggregate the features generated by RoI pooling at different scales, we further propose an attention mechanism that adaptively fuses the pooling results. As illustrated in Fig. 3, we add an attention branch for each feature, consisting of two blocks. The first block contains a global average pooling, and the second contains two consecutive fc layers. We then apply softmax normalization to the generated weights to balance the contribution of each feature. The final aggregated feature is the weighted sum of the three features.
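A minimal NumPy sketch of the attention-based fusion, under stated assumptions. Each branch applies global average pooling and then two fc layers, producing one scalar score per pooled feature. The ReLU between the two fc layers is an assumption. The scores are softmax-normalized across the three features. The pooled maps have different spatial sizes (4, 8, 12), and this section does not say how they are brought to a common shape before summation. As a placeholder, the sketch sums their globally averaged C-dimensional vectors.

```python
import numpy as np


def cfa_fuse(pooled, fc1_weights, fc2_weights):
    """Attention-weighted fusion of K pooled RoI features (sketch).

    pooled: list of K arrays, each (C, r_k, r_k) with its own resolution r_k.
    fc1_weights[k]: (hidden, C) and fc2_weights[k]: (1, hidden) form branch k.
    Returns (fused, weights) with shapes (C,) and (K,).
    """
    logits, vectors = [], []
    for feat, w1, w2 in zip(pooled, fc1_weights, fc2_weights):
        g = feat.mean(axis=(1, 2))            # block 1: global average pooling -> (C,)
        hidden = np.maximum(w1 @ g, 0.0)      # block 2: fc -> ReLU (assumed) -> fc
        logits.append(float((w2 @ hidden)[0]))
        vectors.append(g)                     # placeholder common shape: the GAP vector
    logits = np.array(logits)
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()                  # softmax across the K features
    fused = np.tensordot(weights, np.stack(vectors), axes=1)  # weighted sum -> (C,)
    return fused, weights
```

With all fc weights set to zero, every branch scores equally, and the fusion reduces to a plain average of the three features.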

Figure 3. Illustration of context-aware feature aggregation. An attention mechanism is adopted to adaptively aggregate different features, where the weights are normalized with a softmax function.

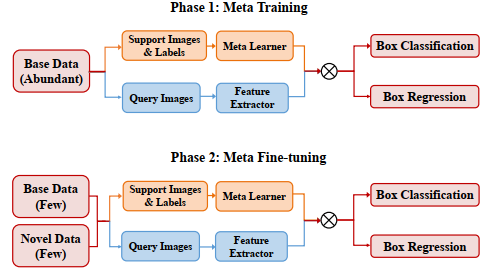

3.3. Learning Strategy

As illustrated in Fig. 4, we follow the training paradigm in [13, 35, 34], which consists of meta-training and meta fine-tuning. In the meta-training phase, abundant annotated data from the base classes is provided. We jointly train the feature extractor, the dense relation distillation module, the context-aware feature aggregation module, and the other basic components of the detection model. In the meta fine-tuning phase, we train the model on both base and novel classes. Since only k labeled bounding boxes are available for each novel class, we also include k boxes for each base class to balance the samples from base and novel classes. The training procedure is the same as in the meta-training phase, but with fewer iterations for the model to converge.
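The balancing rule for meta fine-tuning (k boxes per class for both base and novel classes) can be sketched as follows. The annotation format `(image_id, class_name, box)` and the helper name are hypothetical. This per-box sampler is also a simplification: the protocol of [13] selects images so that each class has k annotated instances, which is not modeled here.

```python
import random
from collections import Counter, defaultdict


def build_balanced_finetune_set(annotations, base_classes, novel_classes, k, seed=0):
    """Keep at most k annotated boxes per class, for base and novel classes alike.

    annotations: list of (image_id, class_name, box) tuples (hypothetical format).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for ann in annotations:
        by_class[ann[1]].append(ann)
    selected = []
    for cls in list(base_classes) + list(novel_classes):
        pool = by_class.get(cls, [])
        # Base classes have many boxes, so they are subsampled down to k as well.
        selected.extend(rng.sample(pool, min(k, len(pool))))
    return selected


anns = [(i, "car", (0, 0, 1, 1)) for i in range(50)]
anns += [(100, "bird", (0, 0, 2, 2)), (101, "bird", (1, 1, 3, 3))]
picked = build_balanced_finetune_set(anns, ["car"], ["bird"], k=3)
print(Counter(cls for _, cls, _ in picked))  # Counter({'car': 3, 'bird': 2})
```

The abundant base class `car` is cut down to k = 3 boxes, while the novel class `bird` keeps the only 2 it has.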

Figure 4. Learning strategy of the meta-learning-based few-shot detection framework. The meta learner aims to acquire meta information and help the model generalize to novel classes.

4 Experiments

In this section, we first introduce the implementation details and experimental configurations in Sec. 4.1. Then we present our detailed experimental analysis on PASCAL VOC dataset in Sec. 4.2 together with ablation studies and qualitative results. Finally, results on COCO dataset will be presented in Sec. 4.3.

4.1. Datasets and Settings

Following the instructions in [13], we construct the few-shot detection datasets for a fair comparison with other state-of-the-art methods. Moreover, to obtain more stable few-shot detection results, we perform 10 runs with different randomly sampled shots. All results reported in the experiments are averaged over these 10 runs.

PASCAL VOC. For the PASCAL VOC dataset, we train our model on the VOC 2007 trainval and VOC 2012 trainval sets and test it on the VOC 2007 test set. The evaluation metric is mean Average Precision (mAP). The object categories are split so that 5 are randomly chosen as novel classes and the remaining 15 are base classes. We use the same three splits as [13]. Their novel classes are {“bird”, “bus”, “cow”, “motorbike” (“mbike”), “sofa”}, {“aeroplane” (“aero”), “bottle”, “cow”, “horse”, “sofa”}, and {“boat”, “cat”, “motorbike”, “sheep”, “sofa”}, respectively. For the few-shot object detection experiments, the few-shot dataset consists of images in which k object instances are available for each category, with k set to 1/3/5/10.
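The three novel-class splits listed above can be written as a small configuration. The 15 base classes of each split are derived as the complement within the 20 standard PASCAL VOC categories. The variable names are illustrative and are not taken from the DCNet repository.

```python
VOC_CLASSES = (
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car",
    "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike",
    "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor",
)

# Novel classes of the three splits of [13].
NOVEL_SPLITS = {
    1: ("bird", "bus", "cow", "motorbike", "sofa"),
    2: ("aeroplane", "bottle", "cow", "horse", "sofa"),
    3: ("boat", "cat", "motorbike", "sheep", "sofa"),
}

SHOTS = (1, 3, 5, 10)  # k values stated in the text


def base_classes(split):
    """The 15 base classes of a split: all VOC classes that are not novel in it."""
    novel = set(NOVEL_SPLITS[split])
    return tuple(c for c in VOC_CLASSES if c not in novel)


for split in NOVEL_SPLITS:
    print(split, len(base_classes(split)))  # 15 base classes per split
```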

COCO. The MS COCO dataset has 80 object categories, of which the 20 categories that overlap with PASCAL VOC are used as novel classes. The 5000 images from the validation set, denoted minival, are used for evaluation, while the remaining images in the train and validation sets are used for training. The few-shot dataset is constructed in the same way as for PASCAL VOC, with k set to 10 or 30.

Implementation Details. We perform training and testing on images at a single scale. The query image is resized so that its shorter side is 800 pixels and its longer side is at most 1333 pixels, preserving the aspect ratio. The support image is resized to a 256 × 256 square. We adopt ResNet-101 [10] as the feature extractor and RoI Align [8] as the RoI feature extractor. The backbone weights are pre-trained on ImageNet [2]. After training on the base classes, only the last fully-connected layer (used for classification) is removed and replaced by a new, randomly initialized one. Notably, all parts of the model are updated during the second (meta fine-tuning) phase; no layers are frozen.

We train our model with a mini-batch size of 4 on 2 GPUs, using SGD with a momentum of 0.9 and a weight decay of 0.0001. For meta-training on PASCAL VOC, models are trained for 240k, 8k and 4k iterations with learning rates of 0.005, 0.0005 and 0.00005, respectively. For meta fine-tuning on PASCAL VOC, models are trained for 1300, 400 and 300 iterations with learning rates of 0.005, 0.0005 and 0.00005, respectively. For MS COCO, meta-training runs for 56k, 14k and 10k iterations with learning rates of 0.005, 0.0005 and 0.00005, respectively, and meta fine-tuning runs for 2800, 700 and 500 iterations for 10-shot fine-tuning and 5600, 1400 and 1000 iterations for 30-shot fine-tuning.
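The resizing rule and the step learning-rate schedules above can be written down as a small sketch. It assumes the common rule of scaling the shorter side to 800 pixels and shrinking further if the longer side would exceed 1333, with rounding to the nearest pixel (the text does not specify rounding). `resize_query`, `step_lr` and the schedule constants are illustrative names, not taken from the DCNet code.

```python
def resize_query(height, width, short_side=800, max_long_side=1333):
    """Return the resized (height, width) of a query image, keeping aspect ratio."""
    scale = short_side / min(height, width)
    # If the longer side would exceed the cap, scale by the longer side instead.
    if max(height, width) * scale > max_long_side:
        scale = max_long_side / max(height, width)
    return round(height * scale), round(width * scale)


def step_lr(iteration, stages):
    """Piecewise-constant learning rate.

    `stages` is a list of (num_iterations, learning_rate) pairs run in order.
    """
    boundary = 0
    for num_iters, lr in stages:
        boundary += num_iters
        if iteration < boundary:
            return lr
    raise ValueError("iteration is past the end of the schedule")


# Schedules quoted in the text.
VOC_META_TRAIN = [(240_000, 0.005), (8_000, 0.0005), (4_000, 0.00005)]
VOC_META_FINETUNE = [(1_300, 0.005), (400, 0.0005), (300, 0.00005)]
COCO_META_TRAIN = [(56_000, 0.005), (14_000, 0.0005), (10_000, 0.00005)]
```

For example, `step_lr(245_000, VOC_META_TRAIN)` falls in the second stage and returns 0.0005, and a 480 × 640 query image becomes 800 × 1067.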

Baseline Method. Since we adopt Faster R-CNN as the base detector, we choose Meta R-CNN [35] as the baseline method and re-implement it ourselves for a fairer comparison.

4.2. Experiments on PASCAL VOC

In this section, we conduct experiments on the PASCAL VOC dataset. We first compare our method with state-of-the-art methods. We then carry out ablation studies to comprehensively analyze the components of the proposed DCNet. Finally, we present qualitative results that give an intuitive view of the effectiveness of our method. For all experiments, we run 10 trials with randomly sampled support data and report the averaged performance.

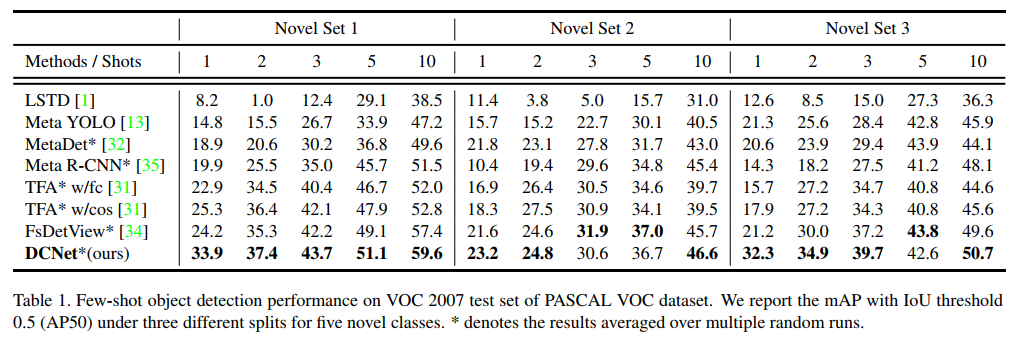

4.2.1 Comparisons with State-of-the-art Methods

In Table 1, we compare our method with previous state-of-the-art methods, most of which report results averaged over multiple random runs. Our proposed DCNet achieves state-of-the-art results on almost all splits and shot numbers, outperforming previous methods by a large margin. Specifically, in the extremely low-shot setting (i.e., 1-shot), our method outperforms the others by about 10% on splits 1 and 3, which suggests that DCNet captures local detailed information that helps it overcome the variations introduced by the randomly sampled training shots.

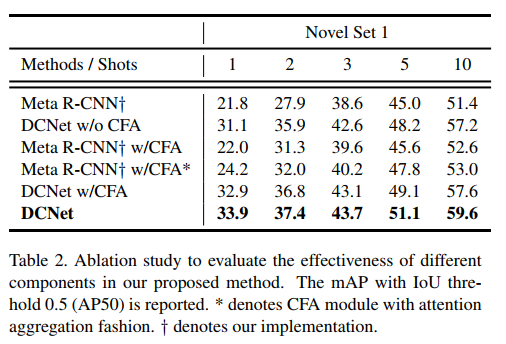

4.2.2 Ablation Study

We present comprehensive ablation studies to analyze the effectiveness of the individual components of the proposed DCNet. All ablation studies are conducted on the PASCAL VOC 2007 test set using the first novel split, and all results are averaged over 10 random runs.

Impact of the dense relation distillation module. We conduct experiments to validate the effectiveness of the proposed dense relation distillation (DRD) module. Specifically, we implement the meta-learning-based few-shot detection baseline, Meta R-CNN, which makes class-specific predictions for the final box classification and regression, whereas the DRD module requires no extra class-specific processing. Rows 1 and 2 of Table 2 compare the baseline with DCNet w/o CFA, which is equivalent to Faster R-CNN equipped with the DRD module. The DRD module achieves consistent improvements on all novel splits and for every shot number, demonstrating the advantage of the relation distillation mechanism over the baseline. Moreover, the improvement over the baseline is especially significant when the shot number is low, indicating that the DRD module successfully exploits useful information from limited support data.
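To make "dense matching" concrete, the sketch below treats it as attention-style reading: every query position is compared with every support position, the similarities are softmax-normalised over support positions, and the weighted support values are concatenated to the query value. The dot-product similarity, the softmax and the concatenation are assumptions made for illustration, not a transcription of DCNet's implementation; key/value encoders are omitted (inputs are taken to be already-encoded key and value vectors), and `dense_relation` is a hypothetical name.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense_relation(query_keys, query_values, support_keys, support_values):
    """Match every query position densely against every support position.

    All inputs are lists of per-position vectors, i.e. spatial maps flattened
    to H*W positions. For each query position, dot-product similarities to all
    support positions are softmax-normalised and used to pool the support
    values; the pooled vector is concatenated to that position's query value.
    """
    dim = len(support_values[0])
    output = []
    for q_key, q_value in zip(query_keys, query_values):
        scores = [sum(a * b for a, b in zip(q_key, s_key)) for s_key in support_keys]
        weights = softmax(scores)
        pooled = [sum(w * s_value[c] for w, s_value in zip(weights, support_values))
                  for c in range(dim)]
        output.append(list(q_value) + pooled)
    return output
```

Nothing in this sketch is class-specific, which mirrors the point above that the DRD module needs no extra class-specific processing.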

Impact of the context-aware feature aggregation module. We carry out experiments to evaluate the effectiveness of the proposed context-aware feature aggregation (CFA) module. Specifically, in this plain version, the RoI features generated by the parallel branches are aggregated by simple summation. As shown in rows 1 and 3 of Table 2, introducing the CFA module gives Meta R-CNN notable gains over the baseline. Since the CFA module aims to preserve detailed information in a scale-aware manner, detailed features at different levels can be retrieved to assist the prediction process.
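The plain aggregation can be illustrated on a single-channel RoI grid. This is a hedged sketch under several assumptions: plain adaptive average pooling stands in for RoI Align, each branch is resized back to a common size with nearest-neighbour sampling so that the branches can be summed, and the resolutions in the usage example are hypothetical rather than the ones evaluated in Table 3.

```python
def adaptive_avg_pool(grid, size):
    """Average-pool a square grid into size x size bins.

    Assumes the grid side length is divisible by `size`.
    """
    step = len(grid) // size
    pooled = []
    for i in range(size):
        row = []
        for j in range(size):
            cells = [grid[i * step + di][j * step + dj]
                     for di in range(step) for dj in range(step)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def resize_nearest(grid, size):
    """Nearest-neighbour resize of a square grid to size x size."""
    n = len(grid)
    return [[grid[i * n // size][j * n // size] for j in range(size)]
            for i in range(size)]


def plain_cfa(roi_grid, resolutions, out_size):
    """Pool one RoI at several resolutions and sum the branches element-wise."""
    branches = [resize_nearest(adaptive_avg_pool(roi_grid, r), out_size)
                for r in resolutions]
    return [[sum(b[i][j] for b in branches) for j in range(out_size)]
            for i in range(out_size)]
```

Coarse branches contribute context averaged over the whole RoI, while the finest branch keeps per-cell detail; for example, `plain_cfa(grid, [1, 2, 4], 4)` on a 4 × 4 grid sums a global average, a quadrant average and the raw cell value at every position.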

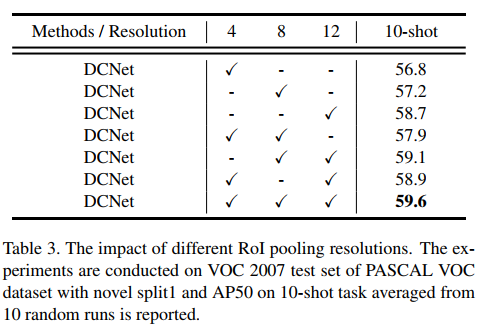

Impact of different RoI pooling resolutions. To further evaluate the impact of different RoI pooling resolutions, we run experiments with each resolution individually and with their combination. As shown in Table 3, when a single resolution is used, a larger pooling resolution yields better performance. However, the best performance is obtained only when the features generated with all three resolutions are aggregated.

Impact of attentive aggregation for the CFA module. Building on the plain CFA module, we further propose an attention-based aggregation mechanism that adaptively fuses the different RoI features. As shown in rows 3 and 4 of Table 2, attentive aggregation further boosts the performance of the model: compared with plain summation, it yields a more comprehensive feature representation by adaptively balancing the contribution of each extracted feature. Finally, combining the DRD module and the CFA module gives DCNet, which achieves the best performance in Table 2.
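The attentive variant replaces the plain sum with softmax-normalised branch weights. In the sketch below, each branch's score is a hypothetical learnable scalar times the branch's global average; this scoring function is an assumption made for illustration, not the paper's exact attention design, and `attentive_aggregate` is a hypothetical name.

```python
import math


def attentive_aggregate(branches, score_weights):
    """Fuse same-shaped branch maps with softmax-normalised weights.

    branches: list of 2-D grids, one per pooling resolution, all the same shape.
    score_weights: one hypothetical learnable scalar per branch; a branch's
    score is its scalar times the branch's global average value.
    Returns the fused grid and the per-branch weights.
    """
    scores = []
    for grid, w in zip(branches, score_weights):
        cells = [v for row in grid for v in row]
        scores.append(w * sum(cells) / len(cells))
    # Numerically stable softmax over branches.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    rows, cols = len(branches[0]), len(branches[0][0])
    fused = [[sum(a * b[i][j] for a, b in zip(alphas, branches))
              for j in range(cols)] for i in range(rows)]
    return fused, alphas
```

Because the weights sum to one, the fused map is a convex combination of the branches, unlike the plain sum, whose scale grows with the number of branches; training the scoring parameters lets the model decide how much each resolution contributes.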

4.2.3 Qualitative Results

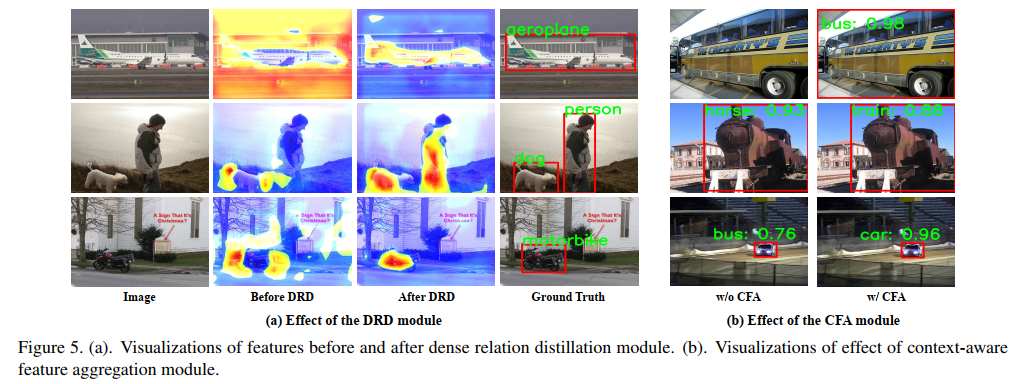

To further understand the effect of the dense relation distillation (DRD) module, we visualize features before and after the DRD module. As shown in Fig. 5 (a), after relation distillation, the query features are activated in a way that facilitates the subsequent detection procedure. Moreover, unlike previous meta-learning-based methods, which perform prediction in a class-wise manner, the proposed DRD module models the relations between the query features and the support features of all classes simultaneously, as shown in the second row of Fig. 5 (a). The DRD module thus enables the model to focus more on the query objects under the guidance of the support information. We also visualize the effect of the CFA module in Fig. 5 (b). Given a relatively large or small query object as input, DCNet w/o CFA suffers from misclassification or missed detections, whereas introducing the CFA module effectively resolves these issues.

4.3. Experiments on MS COCO

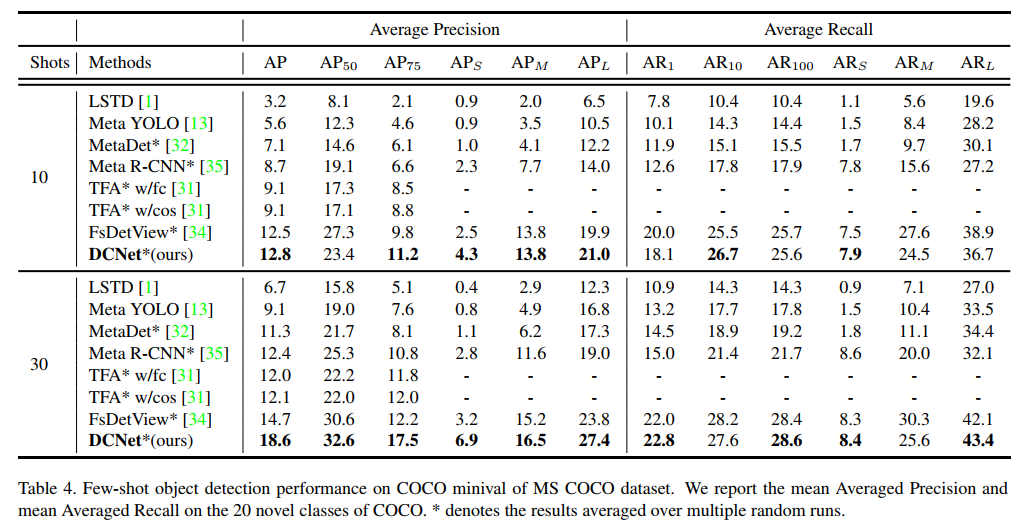

We evaluate the 10-shot and 30-shot settings on the MS COCO benchmark and report performance with the standard COCO metrics, averaged over 10 runs with randomly sampled shots. Results on the novel classes are shown in Table 4. Despite the challenging nature of COCO, with its large number of categories, our proposed DCNet achieves state-of-the-art performance on most of the metrics.

5. Conclusion

In this paper, we have presented the Dense Relation Distillation Network with Context-aware Aggregation (DCNet) to tackle the few-shot object detection problem. The dense relation distillation module adopts a dense matching strategy between query and support features to fully exploit the support information. Furthermore, the context-aware feature aggregation module adaptively harnesses features from different scales to produce a more comprehensive feature representation. Ablation experiments demonstrate the effectiveness of each component of DCNet. Our proposed DCNet achieves state-of-the-art results on two benchmark datasets, i.e., PASCAL VOC and MS COCO.
